Description

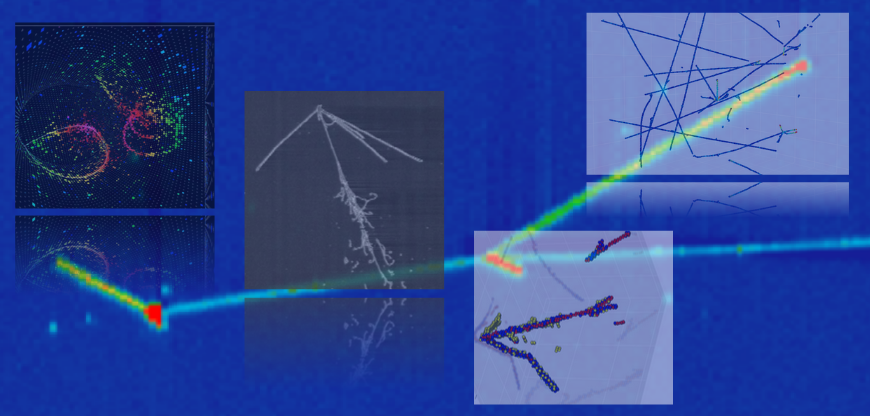

Deep learning models for reconstructing LArTPC neutrino data have become ubiquitous and have been applied successfully in real physics analyses. However, these models usually have to be trained from scratch for each task, and they do not explicitly account for symmetries or systematic uncertainties.

Building on recent advances in contrastive learning, we show how such a method can be applied to sparse 3D LArTPC data and demonstrate it on the open PILArNet dataset. The contrastive learning framework allows us to extract representations from the data that are invariant to underlying symmetries and systematic uncertainties. Using contrastive learning, we pretrain a sparse submanifold convolutional model based on ConvNeXt V2. We showcase the flexibility and efficacy of the method by fine-tuning the pretrained model on classification and semantic segmentation tasks, where it outperforms conventional deep learning methods.
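The abstract does not specify which contrastive objective or which augmentations are used. As a minimal sketch, assuming a SimCLR-style NT-Xent loss, the NumPy code below shows how two augmented "views" of one event can be produced and how a contrastive loss rewards embeddings of the same event for agreeing while penalizing agreement with other events in the batch. The `augment` function is hypothetical: its random 3D rotation stands in for a geometric symmetry and its global energy rescaling stands in for a calibration systematic. The sparse ConvNeXt V2 encoder is omitted, so the embeddings passed to `nt_xent_loss` are placeholders for its outputs.

```python
import numpy as np


def augment(points, energies, rng, energy_scale_sigma=0.05):
    """Hypothetical augmentation producing one 'view' of a sparse 3D event.

    points:   (N, 3) voxel coordinates
    energies: (N,)   deposited energy per voxel
    The random rotation models a symmetry and the global energy rescaling
    models a systematic uncertainty; both choices are assumptions.
    """
    # Uniformly random rotation from the QR decomposition of a Gaussian matrix
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))  # fix column signs so the result is uniform
    if np.linalg.det(q) < 0:     # turn a reflection into a proper rotation
        q[:, 0] = -q[:, 0]
    # One common energy-scale factor for the whole event
    scale = 1.0 + energy_scale_sigma * rng.normal()
    return points @ q.T, energies * scale


def nt_xent_loss(z1, z2, temperature=0.1):
    """NT-Xent (SimCLR-style InfoNCE) loss for paired embeddings.

    z1, z2: (B, D) embeddings of two views of the same B events.
    Row i of z1 and row i of z2 are a positive pair; every other row
    in the combined batch acts as a negative.
    """
    b = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # unit vectors -> cosine similarity
    sim = z @ z.T / temperature                        # (2B, 2B) similarity matrix
    np.fill_diagonal(sim, -np.inf)                     # a view is not its own positive
    # Index of the positive partner for each of the 2B rows
    pos = np.concatenate([np.arange(b, 2 * b), np.arange(0, b)])
    # Numerically stable row-wise log-softmax
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    # Average negative log-probability assigned to the positive pair
    return -log_prob[np.arange(2 * b), pos].mean()
```

In a pretraining loop, each event would be augmented twice, both views would be passed through the same encoder, and this loss would be minimized. Representations that do well under it are encouraged to be insensitive to exactly the transformations applied in `augment`, which is why the choice of augmentations encodes which symmetries and systematics the model should ignore.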